Stable Diffusion LoRA Model, A First Try

After the craziest March in the recent history of AI development, everyone has witnessed the powerful capabilities of generative AI. In the field of computer vision, there have been countless AIGC (AI-generated content) methods and tools, such as GigaGAN, Stable Diffusion, DALL·E2, MidJourney, LoRA, and many more, whose results are stunning and awe-inspiring.

I had planned to write a technical report, sort-out and studying generative models from the perspective of probability theory. With a rigorous attitude, I intended to first experiment with the capabilities of these new technologies before writing this note. However, things spiraled out of control, and even though I had already played around with things like StyleTransfer 8 years ago, I still found them extremely fascinating.

I spent some time training a Stable Diffusion RoLA model(civitai link), this post will demonstrate some technical details.

LoRA: Low-rank Adaptation for Fast Text-to-Image Diffusion Fine-tuning

Many people like to fine-tune stable diffusion models to fit their needs and generate higher fidelity images. However, the fine-tuning process is very slow, and finding a good balance between the number of steps and the quality of the results is not easy. Furthermore, the final output of a fully fine-tuned model is very large. Some people use textual inversion as an alternative method, but clearly this is not optimal: textual inversion only creates a small word embedding, resulting in a final image that is not as good as that produced by a fully fine-tuned model.

As the results, LoRA came out. LoRA stands for Low-Rank Adaptation, a mathematical technique to deal with the problem of fine-tuning LLMs and reduce the number of parameters that are trained. The idea is very simple. Just like ResNet, training residuals instead of the model itself, which is indeed the work of Microsoft Research. By applying LoRA to cross-attention layers, it links image representations with prompts describing them.

The Self-trained Model: Some Details

This LoRA model is trained by a non-NSFW subset of the photobook of the famous cosplayer Chunmomo(蠢沫沫). So it won’t work well with NSFW prompts.

I manually selected 96 face images and 259 bust/full body images, as shown in the figure below. The selection of face concept set is to use images with no occlusion and a single background as much as possible.

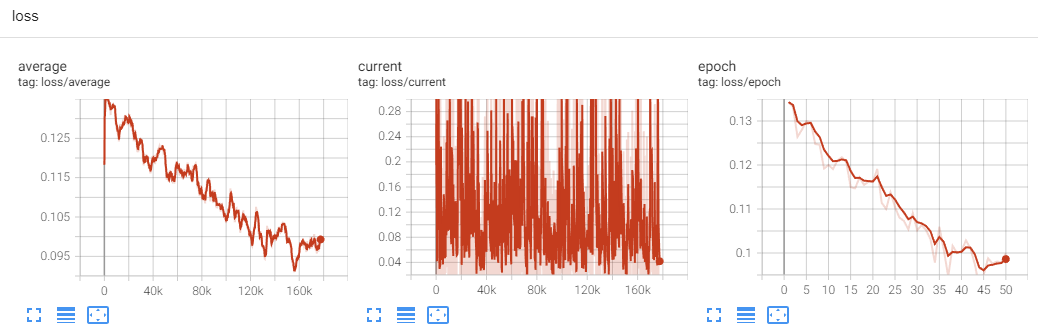

The training process has gone through 50 epochs, and each concept have trained 10 times. Image resolution 512x768, batch size 1(RTX 2080 8GB).

After 19.5 hours of waiting, I finally got the LoRA model.

Some Generated Image Results

Important Statement

This model is for academic research use only, commercial use is strictly prohibited. Use of this model to generate NSFW content, as well as any use that compromises individual rights and privacy is prohibited.